#Gpower pearson correlation dependent r code#

Double-click on the setup file in this folder.

Results: Sample size tables for one correlation test are presented in Table 1 and Table 2. All calculations are based on the algorithm described by Guenther (1977) for calculating the cumulative distribution of the correlation coefficient.
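For a rough cross-check of such sample size tables, here is a minimal R sketch. It assumes the Fisher z approximation and a null hypothesis of zero correlation; it is not Guenther's exact algorithm, so its results can differ from the tabulated values by a few cases. The function name `n_for_correlation` is hypothetical.

```r
# Approximate sample size needed to detect a true correlation r when testing
# H0: rho = 0. Uses the Fisher z transformation (an approximation, NOT the
# exact Guenther (1977) method referenced in the text).
n_for_correlation <- function(r, alpha = 0.05, power = 0.80, two_sided = TRUE) {
  stopifnot(abs(r) > 0, abs(r) < 1)
  # Critical value for the chosen significance level
  z_alpha <- if (two_sided) qnorm(1 - alpha / 2) else qnorm(1 - alpha)
  # Quantile corresponding to the desired power
  z_beta <- qnorm(power)
  # atanh(r) is the Fisher z transform; its standard error is 1 / sqrt(n - 3)
  n <- ((z_alpha + z_beta) / atanh(abs(r)))^2 + 3
  ceiling(n)
}

n_two_sided <- n_for_correlation(0.3)                    # two-tailed test
n_one_sided <- n_for_correlation(0.3, two_sided = FALSE) # one-tailed test
print(c(two_sided = n_two_sided, one_sided = n_one_sided))
```

A one-tailed test needs fewer cases than a two-tailed test at the same significance level and power, which is why the two kinds of test are usually tabulated separately.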

This contains the GPower installation files.

The alternatives $H_a: R_0 < R_1$ or $H_a: R_0 > R_1$ are for a one-tailed test.

For two dependent measures with correlation $r$, $d_z = \frac{d}{\sqrt{2(1-r)}}$, so with $r = 0.9$, a $d$ of 1 would be a $d_z$ of 2.24.
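Written out step by step, the conversion from $d$ to $d_z$ for $r = 0.9$ and $d = 1$ looks as follows. This assumes the usual relationship for two dependent measures with equal variances.

$$
d_z = \frac{d}{\sqrt{2(1-r)}} = \frac{1}{\sqrt{2(1-0.9)}} = \frac{1}{\sqrt{0.2}} \approx 2.24
$$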

#Gpower pearson correlation dependent r download#

Choose the appropriate link to begin your download. When your download is complete, open it.

When there is a strong correlation between dependent variables, for example $r = 0.9$, we get $d = d_z\sqrt{2(1-0.9)}$, and a $d_z$ of 1 would be a $d$ of 0.45.
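The two conversions can be checked with a short R sketch. The helper names `d_to_dz` and `dz_to_d` are hypothetical, and the formulas assume equal variances in the two dependent measures.

```r
# Convert between Cohen's d and d_z for two dependent measures
# whose correlation is r (assumes equal variances in both measures).
d_to_dz <- function(d, r) d / sqrt(2 * (1 - r))
dz_to_d <- function(dz, r) dz * sqrt(2 * (1 - r))

round(d_to_dz(1, r = 0.9), 2)  # 2.24: strong correlation inflates d_z
round(dz_to_d(1, r = 0.9), 2)  # 0.45: the same relation in the other direction
dz_to_d(1, r = 0.5)            # at r = 0.5 the two effect sizes coincide
```

At $r = 0.5$ the factor $\sqrt{2(1-r)}$ equals 1, so $d$ and $d_z$ are identical. Above 0.5, $d_z$ is larger than $d$.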

#Gpower pearson correlation dependent r windows#

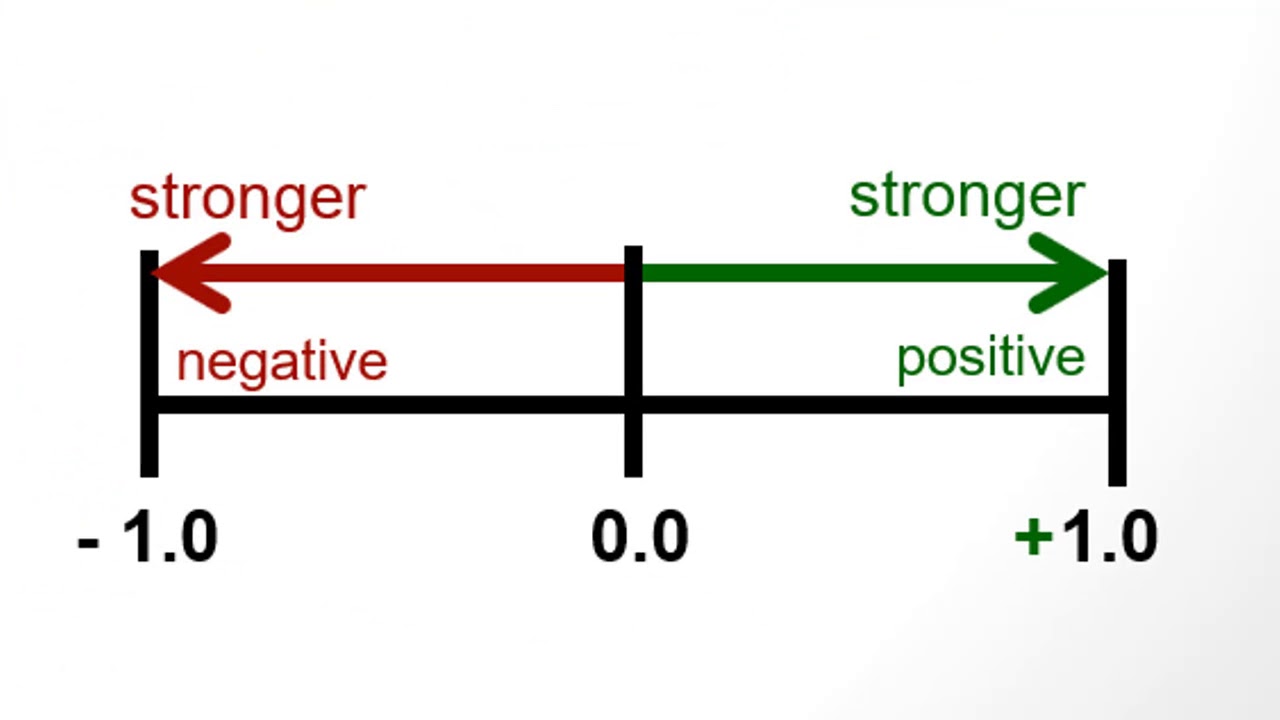

Navigate to the GPower website and scroll down to the DOWNLOAD section. There are two download options: Windows and Mac.

The Pearson correlation coefficient, sometimes known as Pearson's r, is a statistic that measures how closely two variables are linearly related. A correlation between variables means that as one variable's value changes, the other tends to change in the same way. Pearson's coefficient defines correlation as normalized covariance, and covariance measures how two random variables co-vary, that is, how a change in one is associated with a change in the other. The value of the correlation coefficient (r) lies between -1 and +1; r = 0 means there is no linear relationship between the variables.

Interpretation of Pearson Correlation Coefficient

Let's import data into R or create some example data, starting with `set.seed(150)`. In that example, Pearson's correlation coefficient is 0.90, which indicates a strong correlation between x and y.

A common misconception about the Pearson correlation is that it provides information on the slope of the relationship between the two variables being tested. This is incorrect: the Pearson correlation only measures the strength and direction of the linear relationship between the two variables. To illustrate this, consider an example that calls `set.seed(150)` and loads `library(ggplot2)`. When we plot these x and y values on a chart with `gg <- ggplot(data, aes(x, y, colour = category))` and add a fitted line with `gg <- gg + geom_smooth(alpha = 0.3, method = "lm")`, the relationship looks very different.

One extreme outlier can dramatically change a Pearson correlation coefficient. Consider an example in which variables X and Y have a Pearson correlation coefficient of r = 0.00. Now imagine that we have one outlier in the dataset: this outlier causes the correlation to be r = 0.878.

Logistic regression works with both continuous variables and categorical variables (encoded as dummy variables), so you can run logistic regression directly on your dataset.
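The slope misconception and the outlier effect can both be reproduced with a small base R sketch. The data here are made up for illustration and are not the dataset behind the figures quoted in the text, so the numbers will differ. Only the seed value comes from the text.

```r
set.seed(150)  # seed value from the text; all data below are hypothetical

# Slope vs. correlation: rescaling y changes the slope but not r
x <- 1:50
y_shallow <- 0.2 * x + rnorm(50, sd = 2)
y_steep <- 10 * y_shallow            # same pattern, ten times the slope

r_shallow <- cor(x, y_shallow)
r_steep <- cor(x, y_steep)           # identical to r_shallow
slope_shallow <- coef(lm(y_shallow ~ x))[["x"]]
slope_steep <- coef(lm(y_steep ~ x))[["x"]]
print(c(r_shallow = r_shallow, r_steep = r_steep))
print(c(slope_shallow = slope_shallow, slope_steep = slope_steep))

# Outlier sensitivity: two unrelated variables, then one extreme point added
u <- rnorm(30)
v <- rnorm(30)
r_before <- cor(u, v)                # close to zero for unrelated data
r_after <- cor(c(u, 20), c(v, 20))   # a single point at (20, 20) dominates
print(c(r_before = r_before, r_after = r_after))
```

Because r is unchanged when either variable is multiplied by a positive constant, it cannot carry information about how steep the fitted line is.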
The value of Pearson's r ranges from -1 to +1, with 0 denoting no linear correlation, -1 denoting a perfect negative linear correlation, and +1 denoting a perfect positive linear correlation.
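The three reference values can be illustrated with a few lines of base R. The vectors are hypothetical. The quadratic case shows why 0 means no linear correlation rather than no relationship at all.

```r
x <- -10:10

r_pos <- cor(x, 3 * x + 2)    # perfect positive linear relation
r_neg <- cor(x, -2 * x + 1)   # perfect negative linear relation
r_quad <- cor(x, x^2)         # y depends fully on x, but not linearly

print(c(positive = r_pos, negative = r_neg, quadratic = r_quad))
```

In the quadratic case y is completely determined by x, yet r is zero because the increasing and decreasing halves of the curve cancel out.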
